Data

Abstract

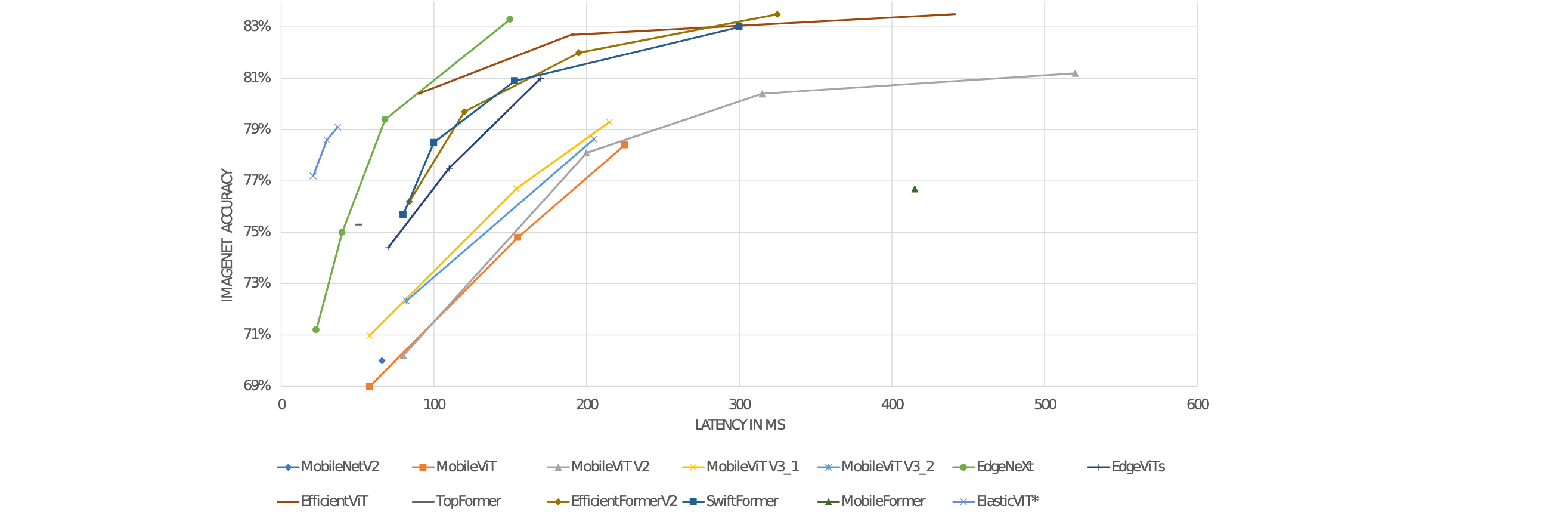

Since the adoption of convolutional neural networks for computer vision tasks, researchers have strived to adapt them to resource-limited hardware. Recently, transformers have shaken up the world of natural language processing and promise to do the same in computer vision. However, their strengths are yet to be determined. In this paper, we evaluate the efficiency of the most popular mobile vision transformer models in terms of latency and accuracy on ImageNet-1k and optimise their performance on a real-time facial landmark estimation task.

Figure 2: Latency-accuracy comparison of mobile-based architectures, tested on a Google Pixel 4 using 256×256 images as input.

Citation

Juan Castrillo, Roberto Valle, and Luis Baumela. Efficiency Evaluation of Mobile Vision Transformers. Information Technology and Systems 933 (2024).

@inproceedings{Castrillo24,
  author = {Juan Castrillo and Roberto Valle and Luis Baumela},
  title = {Efficiency Evaluation of Mobile Vision Transformers},
  booktitle = {Information Technology and Systems},
  volume = {933},
  year = {2024},
  url = {https://doi.org/10.1007/978-3-031-54256-5_1}
}