Data

Abstract

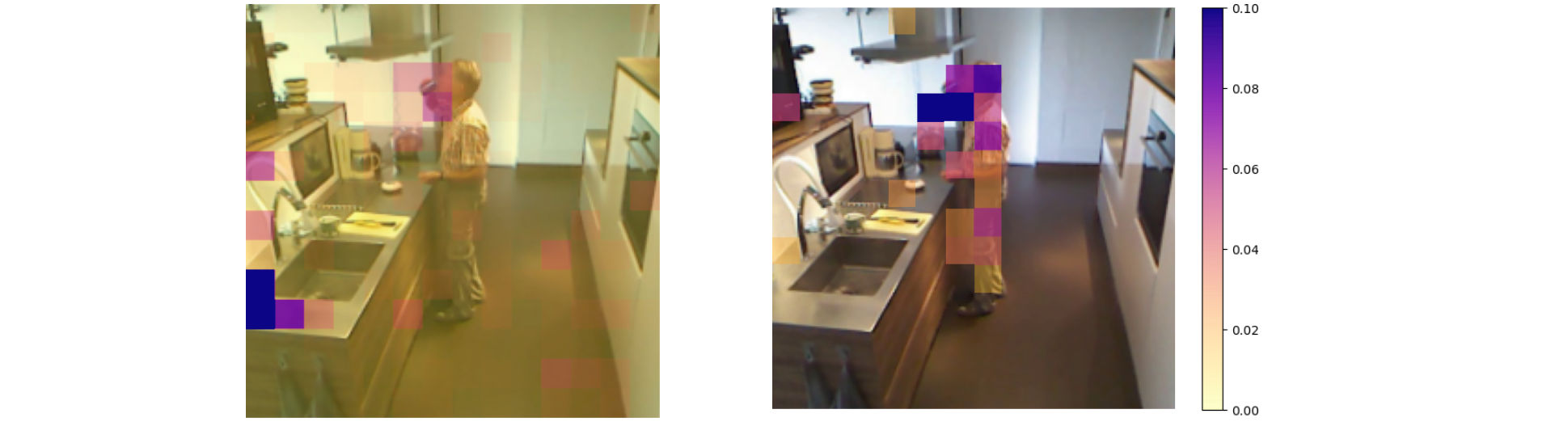

Large pre-trained video transformers are becoming the standard architecture for video processing due to their exceptional accuracy. However, their quadratic computational complexity has been a major obstacle to their practical application in problems that require the recognition of precise motion patterns in video, such as in the recognition of Activities of Daily Living (ADL). Techniques like token pruning help mitigate their computational cost, but overlook some specific aspects of this task such as the actor movement. To address this we propose an improved token selection method that integrates semantic information from the ADL recognition task with that of human motion. Our model relies on a multi-task architecture that infers human pose and activity classification from RGB images. We show that guiding token pruning with motion information significantly improves the trade-off between higher efficiency, obtained by reducing the number of tokens, and accuracy of the classification task. We evaluate our model on three popular ADL recognition benchmarks with their respective cross-subject and cross-view setups. In our experiments, a video transformer modified with our proposed modules sets a new state-of-the-art on the ADL recognition task whilst achieving significant reductions in computational cost.

Figure 1: Attention maps for the ‘Drink.Frombottle’ action on Toyota-Smarthome (CS)

Citation

Ricardo Pizarro and Roberto Valle and José Miguel Buenaposada and Luis Miguel Bergasa and Luis Baumela. Pose-guided token selection for the recognition of activities of daily living. Image Vis. Comput. (2025)

@article{Pizarro25,

author = {Ricardo Pizarro and Roberto Valle and Jos{\'{e}} Miguel Buenaposada and Luis Miguel Bergasa and Luis Baumela},

title = {Pose-guided token selection for the recognition of activities of daily living},

journal = {Image Vis. Comput.},

year = {2025},

url = {https://doi.org/10.1016/j.imavis.2025.105686}

}