Abstract

Deepfake detection has attracted growing interest in recent years due to the proliferation of automated facial forgery generation techniques that can produce manipulated media indistinguishable to the human eye. One of the most difficult aspects of deepfake detection is generalization to unseen manipulation techniques, which is key to making a method useful in real-world applications. In this paper, we propose a new multi-task network termed SFA. It leverages spatiotemporal features extracted from video inputs to provide more robust predictions than image-only models, together with a face alignment task that helps the network identify anomalous facial movements in the temporal dimension. We show that this multi-task approach improves generalization over the single-task baseline and achieves results on par with the current state of the art on different cross-dataset and cross-manipulation benchmarks.

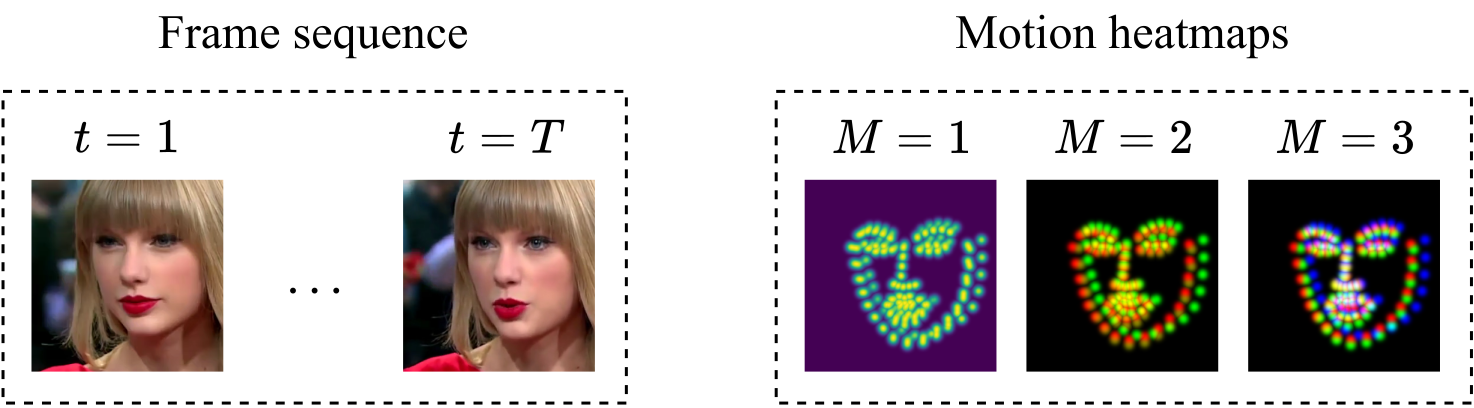

Figure 2: Ground-truth motion heatmap for different numbers of channels.

Citation

Alejandro Cobo, Roberto Valle, José Miguel Buenaposada, and Luis Baumela. Spatiotemporal Face Alignment for Generalizable Deepfake Detection. International Conference on Automatic Face and Gesture Recognition (2025).

@inproceedings{Cobo25,
  author = {Alejandro Cobo and Roberto Valle and Jos{\'{e}} Miguel Buenaposada and Luis Baumela},
  title = {Spatiotemporal Face Alignment for Generalizable Deepfake Detection},
  booktitle = {International Conference on Automatic Face and Gesture Recognition},
  year = {2025},
  url = {https://doi.org/10.1109/FG61629.2025.11099346}
}